Fogwise® AirBox

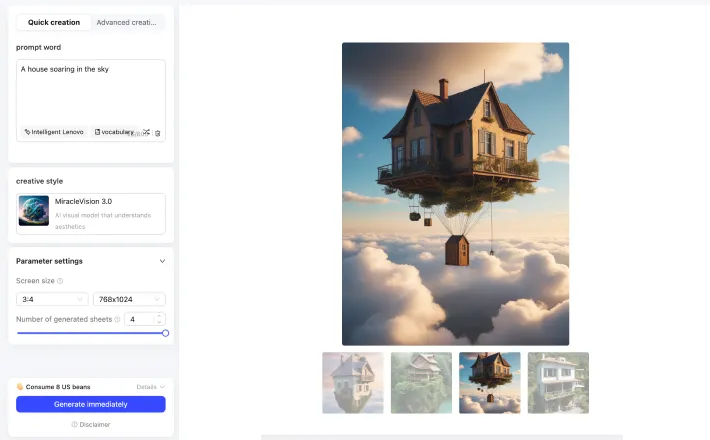

Easy to Build Your Own GPT and Stable Diffusion

Powered by SOPHON SG2300x

- Octa-core Cortex-A53, up to 2.3GHz
- Supports 12-channel HD hardware encoding
- Supports 32-channel HD hardware decoding
- Supports INT8, FP16/BF16 and FP32
- Supports mainstream frameworks
- Built-in 32TOPS@INT8 high-performance TPU (Tensor Processing Unit)

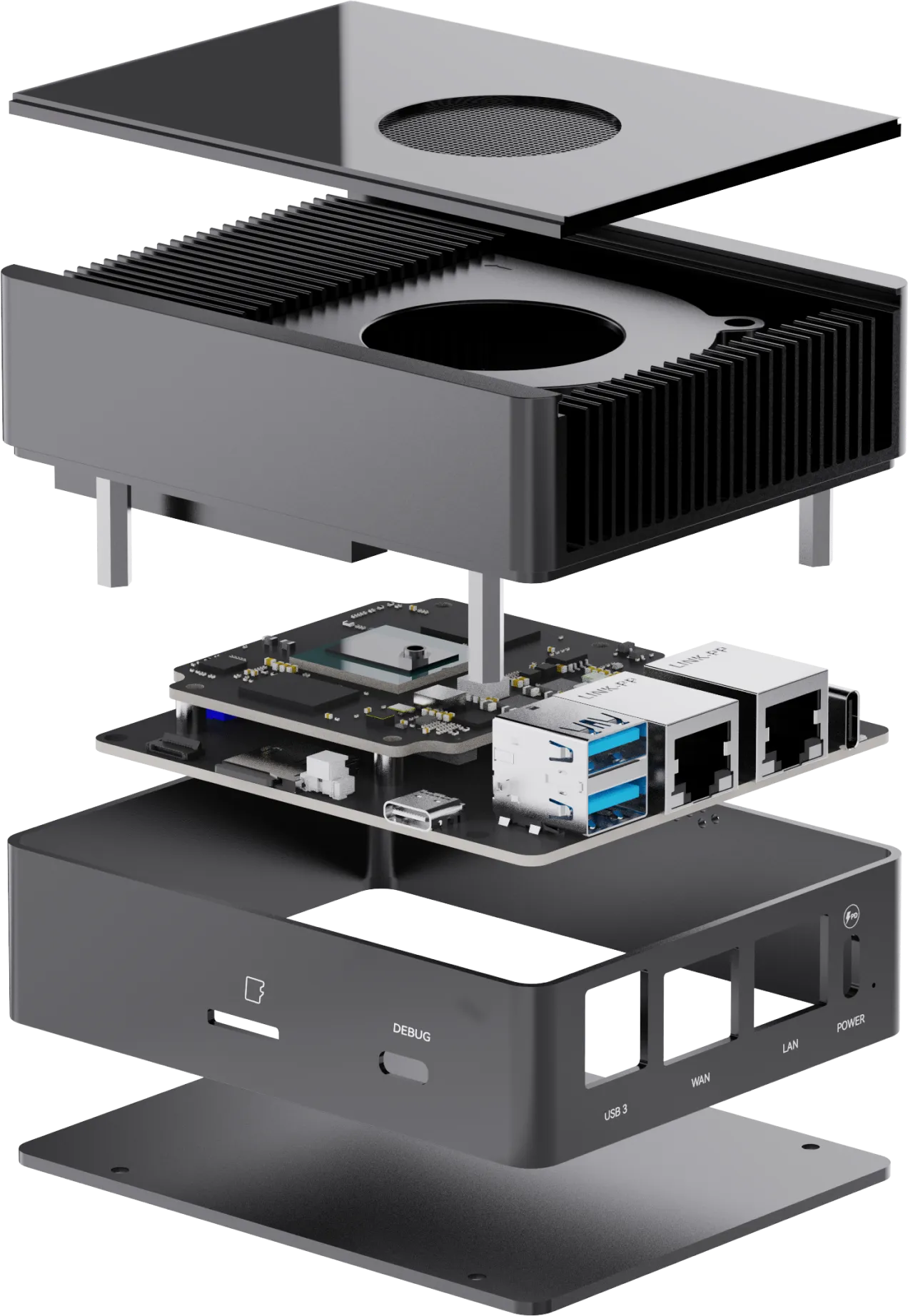

Capable of Operating as an Embedded Device in Harsh Environments

The aluminum alloy shell protects the equipment in harsh environments.

- Rugged and Drop-Proof
- Corrosion-Resistant
- High Thermal Conductivity

Active heat dissipation

PWM speed control

Aluminum Alloy Case: Durable and Reliable

Easy to assemble

Easy-to-Use, Convenient and Efficient

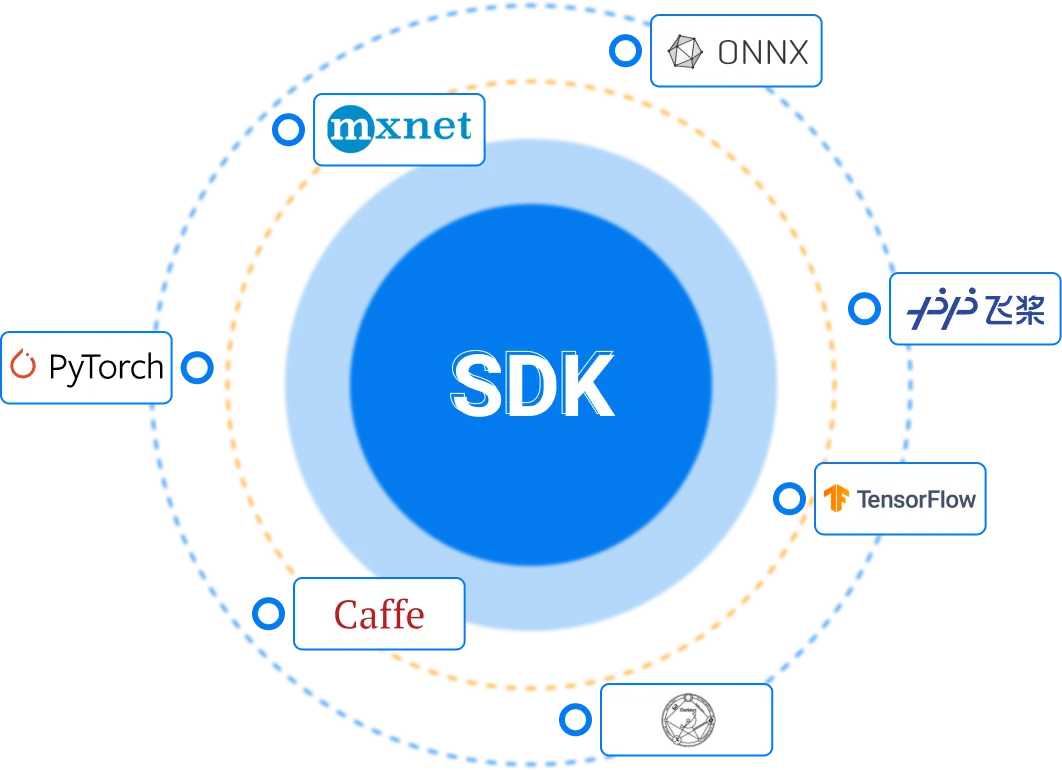

The BMNNSDK one-stop toolkit provides a suite of software tools, including the underlying driver environment, a compiler and inference deployment tools.

The toolkit covers model optimization, efficient runtime support and the other capabilities required for neural network inference.

Supports 32-Channel HD Hardware Decoding

Hardware decoding of up to 32 channels of 1080P@25fps HD video
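To put the decoding figure in perspective, the aggregate workload it implies can be worked out directly. This assumes "1080P" means a 1920×1080 frame and that all 32 channels run at the full 25 fps at once; it is arithmetic on the stated spec, not a measured benchmark.

```latex
\begin{aligned}
\text{frames per second} &= 32 \times 25 = 800 \\
\text{pixels per second} &= 32 \times (1920 \times 1080) \times 25 = 1{,}658{,}880{,}000 \approx 1.66 \times 10^{9}
\end{aligned}
```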

Supports Multiple Storage Devices

- 64GB onboard eMMC
- MicroSD slot
- M.2 M-key slot for NVMe SSDs

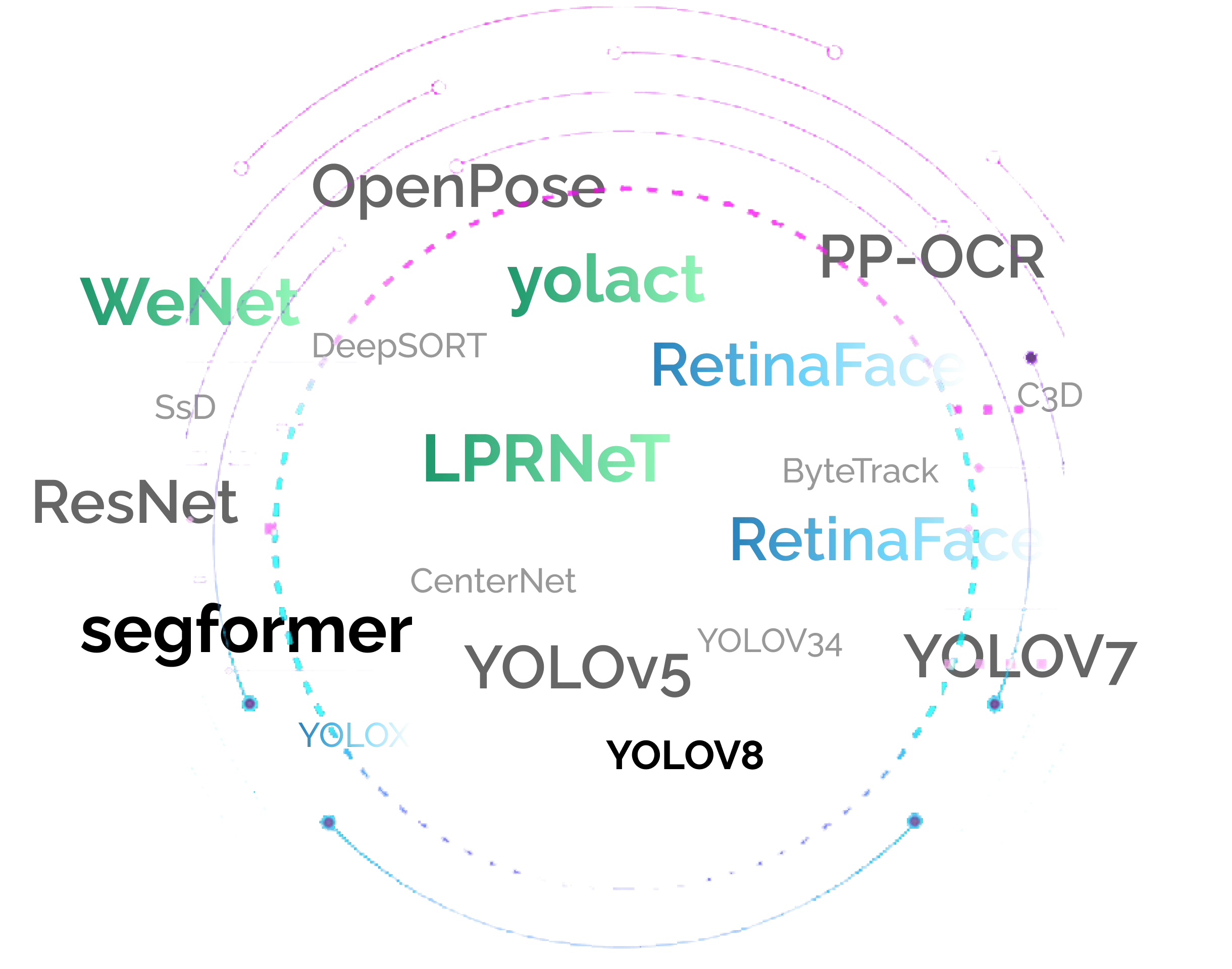

Radxa Model Zoo

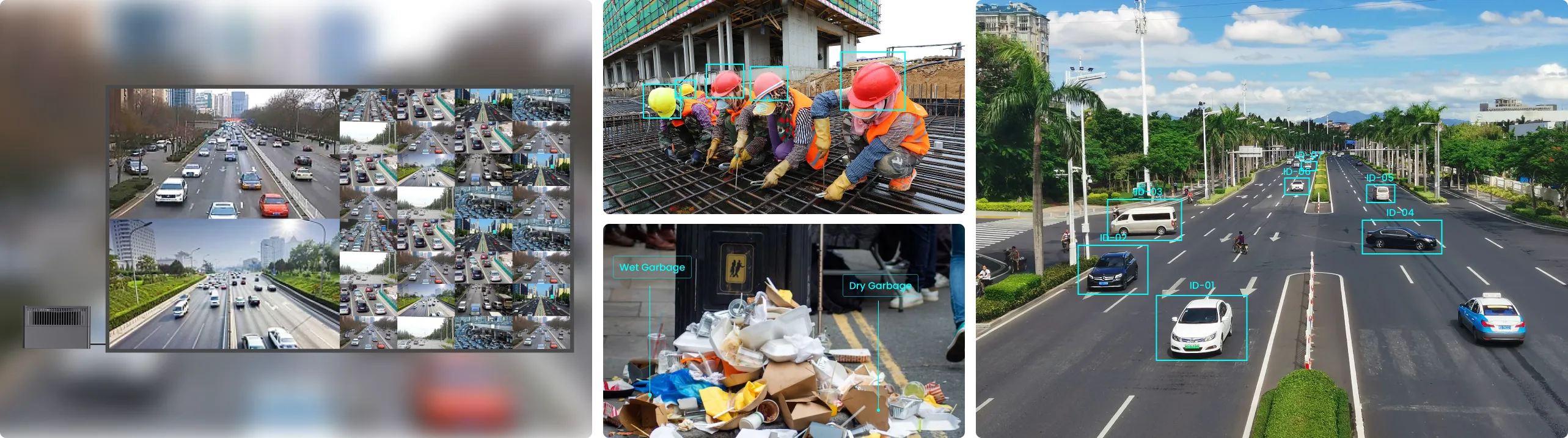

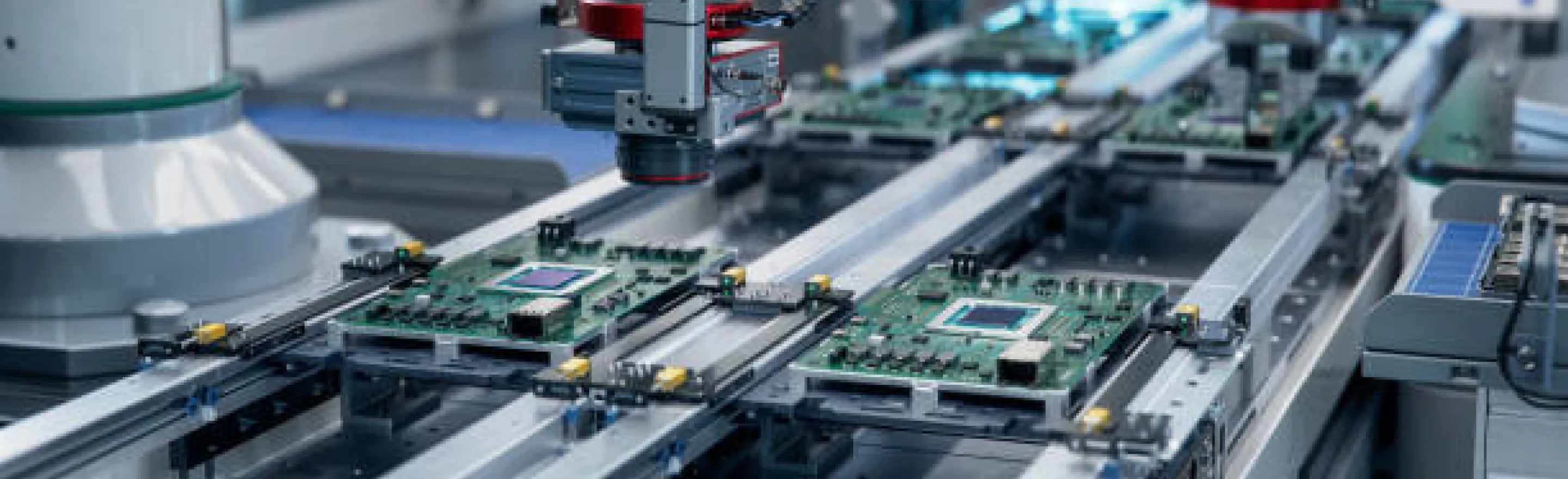

Applications

- Public Security
- Private GPT
- Private Stable Diffusion
- Smart Healthcare
- Industrial AI

Fogwise® AirBox Works with

Radxa Power PD 65W: Charge Smarter, Faster